Privacy Erased: Roblox's New Age Verification Demands Intrusive Facial Biometrics from Children

Roblox's Risky Rollout: Trading Teen Privacy for Biometric Surveillance

Beyond "Unfiltered Chat": The Alarming Reality of Roblox's Biometric Data Grab

Roblox, the immensely popular online platform, is rolling out a new feature called "Trusted Connections" aimed at allowing users aged 13 and older to chat more freely. However, this initiative carries significant inherent dangers, stemming primarily from two design choices: its reliance on facial scans and government IDs for age verification, and its promise of "unfiltered" chats, even with "critical harm" monitoring in place. While framed as a safety measure, these changes open a Pandora's box of privacy violations, data security risks, and potential exposure to harmful content.

The Perils of Biometric Data Collection from Minors

The cornerstone of "Trusted Connections" is the mandatory age verification via video selfie analysis or government ID. This directly involves the collection of sensitive biometric data, even from users as young as 13.

The risks associated with collecting and storing such immutable personal information, especially from minors, are manifold:

- Irreversible Compromise: Unlike passwords or usernames, biometric data like facial geometry cannot be changed once it is compromised in a data breach. A breach of the systems of Roblox or its verification partner, Persona, could expose millions of users to lifelong risks of identity theft and fraudulent impersonation.

- Privacy Erosion and Surveillance: The collection of facial scans contributes to a growing database of biometric information on young users. This raises concerns about potential surveillance, tracking, and the creation of detailed profiles on individuals, blurring the line between online activity and real-world identity. Even if the data is deleted after 30 days as claimed, the exception permitting retention "where legally required" leaves a dangerous loophole for misuse.

- Vulnerability to Advanced Attacks: Biometric systems, while seemingly secure, are not infallible. As highlighted by cybersecurity experts, they are susceptible to sophisticated attacks like "deepfakes" and adversarial AI, which could be used to bypass age verification, potentially allowing underage users access to unfiltered chats or, conversely, enabling bad actors to impersonate legitimate users.

- Lack of Informed Consent for Minors: While Roblox states that options for "verified parental consent" will come in the future, the initial implementation relies on teens themselves submitting facial scans. It is questionable whether a 13-year-old can give truly informed consent to the collection and processing of their biometric data, or fully understand its long-term implications.

"Unfiltered Chats" and the Illusion of Safety

The promise of "unfiltered" chats within "Trusted Connections" is deeply concerning, despite Roblox's assurance of "critical harm" monitoring. The very nature of "unfiltered" implies a significant loosening of moderation, and relying solely on post-event monitoring for "critical harm" is a reactive, rather than proactive, safety measure.

- Defining "Critical Harm": The broad definition of "critical harm," which encompasses "predatory behavior," "sexualization of minors," and "inappropriate sexual conversations," covers important ground but still leaves a vast grey area. Where does "inappropriate" end and merely "unfiltered" begin? The subjective nature of this definition could lead to inconsistent enforcement and allow harmful content to persist, particularly in the rapid-fire environment of online chat.

- Reactive vs. Proactive Safety: Monitoring for "critical harm" means the harm must occur before it is detected and addressed. This places children and teens at immediate risk, potentially exposing them to grooming, cyberbullying, hate speech, or the exchange of illegal content before intervention can take place.

- Limitations of AI Monitoring: While AI can be a powerful tool for content moderation, it is not foolproof. AI models can be bypassed or tricked, and they may fail to grasp nuanced human conversation, especially in a truly "unfiltered" environment. Users who communicate sensitive or harmful content through coded language or subtle cues may evade automated detection.

- Real-Life Connections and Off-Platform Risks: The provision allowing teens to add adults they "know in real life" via QR codes or contact import, while seemingly designed to connect existing relationships, also presents a vulnerability. This could facilitate the transition of potentially harmful offline relationships into the less-monitored online space, or create pressure for teens to add individuals they barely know in person.

Broader Implications and the Slippery Slope

Roblox's move is part of a broader industry trend towards age verification, often spurred by legislative pressure. However, the chosen methods and the implications for user privacy warrant serious scrutiny.

- Normalization of Biometric Scans: By implementing facial scans for a platform popular with young users, Roblox normalizes the collection of biometric data at an early age. This could pave the way for more pervasive biometric requirements across online services, eroding privacy expectations for an entire generation.

- The "Security vs. Privacy" Debate: While enhanced safety for minors is a commendable goal, the methods employed by Roblox lean heavily on invasive data collection, potentially sacrificing individual privacy for perceived security. A true balance requires prioritizing privacy-preserving technologies and comprehensive, proactive content moderation, rather than relying on reactive monitoring of "unfiltered" spaces.

- Potential for Abuse and Mission Creep: Any system designed to collect and verify sensitive personal information carries the inherent risk of mission creep. Data collected for one purpose could, in the future, be repurposed or accessed for other uses, potentially by law enforcement or other entities, raising concerns about individual liberties and digital rights.

In conclusion, while Roblox's stated intention is to create safer spaces, the implementation of facial scans and unfiltered chats for "Trusted Connections" introduces a range of significant and inherent dangers. These include substantial privacy risks due to biometric data collection from minors, the reactive and potentially insufficient nature of "critical harm" monitoring in unfiltered environments, and the broader societal implications of normalizing intrusive age verification methods. It is crucial for users, parents, and policymakers to critically evaluate these measures and demand solutions that truly prioritize safety without compromising fundamental privacy rights.

Advice for Parents: Guarding the Digital Frontier

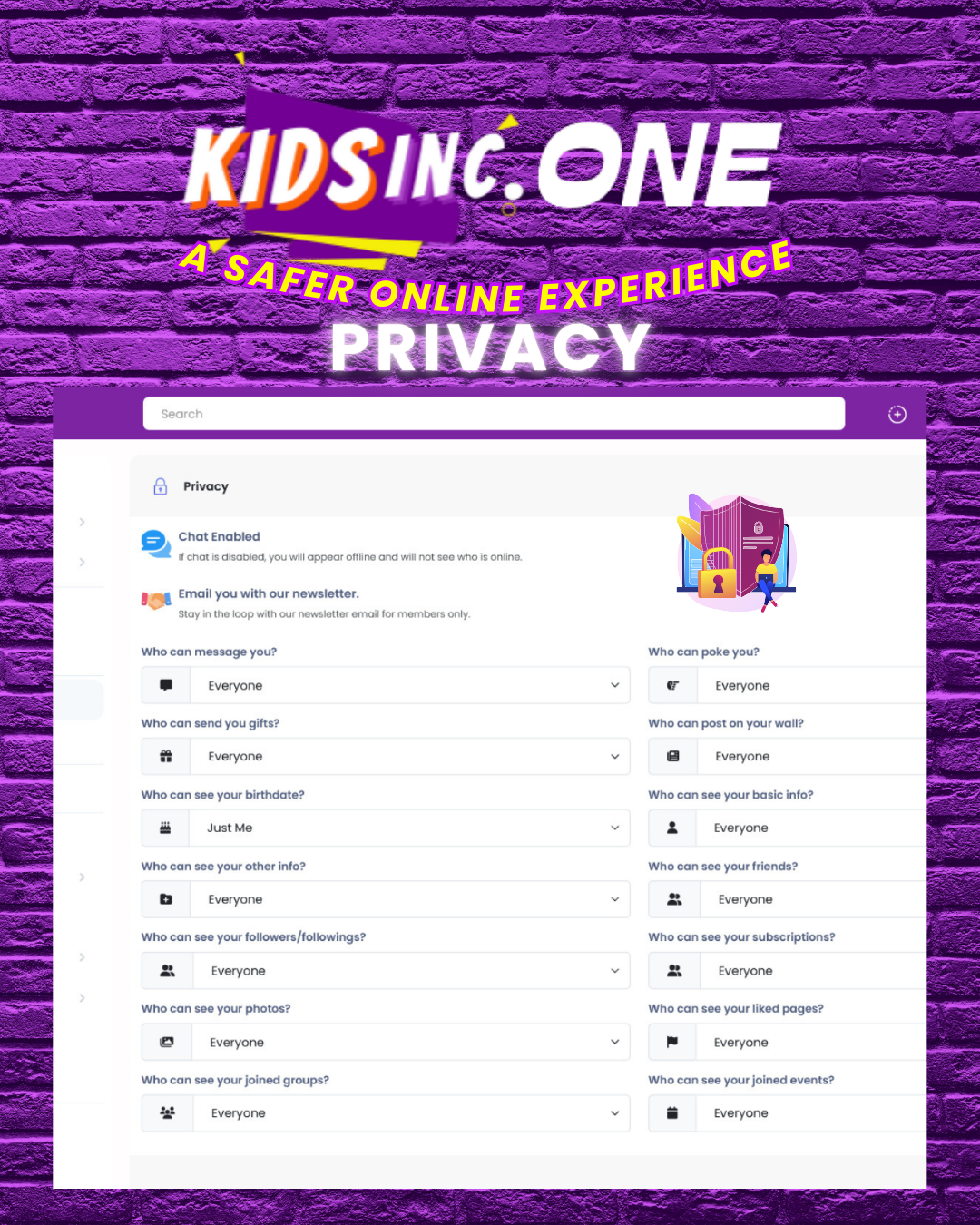

For parents seeking safer options, we invite you to join our private network, kidsinc.ONE.

The digital landscape can feel overwhelming, but parents are the first line of defense. Proactive engagement and open communication are key.

- Educate Yourself and Your Child: Stay informed about the apps your children use and their privacy policies. Discuss online risks openly and honestly with your kids, explaining why certain information should remain private. Use simple language and real-life scenarios.

- Use Parental Control Tools Wisely: Consider reputable parental control software that can help manage screen time, filter content, and monitor for concerning activity. Remember, these are tools to aid, not replace, communication.

- Harden Privacy Settings: Sit down with your child and go through the privacy settings on all their social media and gaming accounts. Default settings are rarely the most private.

- TikTok Specifics: Utilize TikTok's "Family Pairing" feature to link your account to your child's. This allows you to set daily screen time limits, enable Restricted Mode, control discoverability (private vs. public account), manage direct messages (DMs), and filter content.

- No Unsupervised Downloads: Require permission before your child downloads any new apps or games. Research new platforms thoroughly before allowing access.

- Device-Level Permissions: Regularly review app permissions on your child's device (e.g., access to contacts, microphone, camera, location services). Disable anything that isn't absolutely essential for the app's core function.

- Common Areas for Devices: Keep devices used by younger children in common areas of the home, allowing for natural supervision.

- Strong Passwords and 2FA: Teach your children to create strong, unique passwords for every account, and enable two-factor authentication (2FA) wherever possible. Consider a family password manager.

- Understand In-App Browsers: Explain that tapping a link inside an app may open it in the app's built-in browser, which can lack the security features of a standalone browser and may allow the app to monitor activity on the page. Encourage opening sensitive links in an external, secure browser instead.

- Model Good Behavior: Children learn by example. Be mindful of your own digital habits, privacy settings, and the information you share online.

Safety Tips for Kids: Navigating Your Digital World

Your online world is exciting, but it also has rules to keep you safe. Think of these as your superhero guide to the internet!

- Think Before You Post/Share: Once something is online, it's very hard to take back. Ask yourself: "Would I be okay with my parents, teachers, or even a future boss seeing this?" If not, don't share it.

- Keep Personal Info Private: Never share your full name, home address, school name, phone number, or where you hang out without a trusted adult's permission. Your personal information is like money – don't give it away easily!

- Passwords Are Your Secret Superpower: Never share your passwords with anyone except your parents. Make them long and strong, using a mix of letters, numbers, and symbols.

- "Friends" Online Might Not Be Friends in Real Life: Be very careful about who you accept as a "connection" or "follower." Not everyone online is who they say they are. Never agree to meet someone you only know online without your parents' explicit permission and supervision.

- Be Wary of Links and Downloads: If a message or link looks weird or comes from someone you don't know, don't click on it! It could be a trick to get your information or put a virus on your device. Always ask a parent first.

- "Unfiltered" Doesn't Mean "Safe": If an app or chat says it's "unfiltered," it means some of the usual safety checks are off. This can expose you to things that aren't good for you. If something makes you feel uncomfortable, confused, or scared, tell a trusted adult right away.

- Know How to Block and Report: If someone is being mean, asking for inappropriate things, or making you uncomfortable, block them immediately and report them to the platform. Don't engage with them.

- If Something Feels Wrong, Tell an Adult: Trust your gut feeling. If anything online makes you feel uneasy, worried, or anxious, talk to a parent, teacher, or another trusted adult. They are there to help you, not to judge.

- Regular Privacy Checks: Ask your parents to help you check your privacy settings on all your apps regularly. Make sure you know who can see your posts and send you messages.